last year I was contacted by La Phase 5; they needed help with the WebGL part of their 2021 wish card.

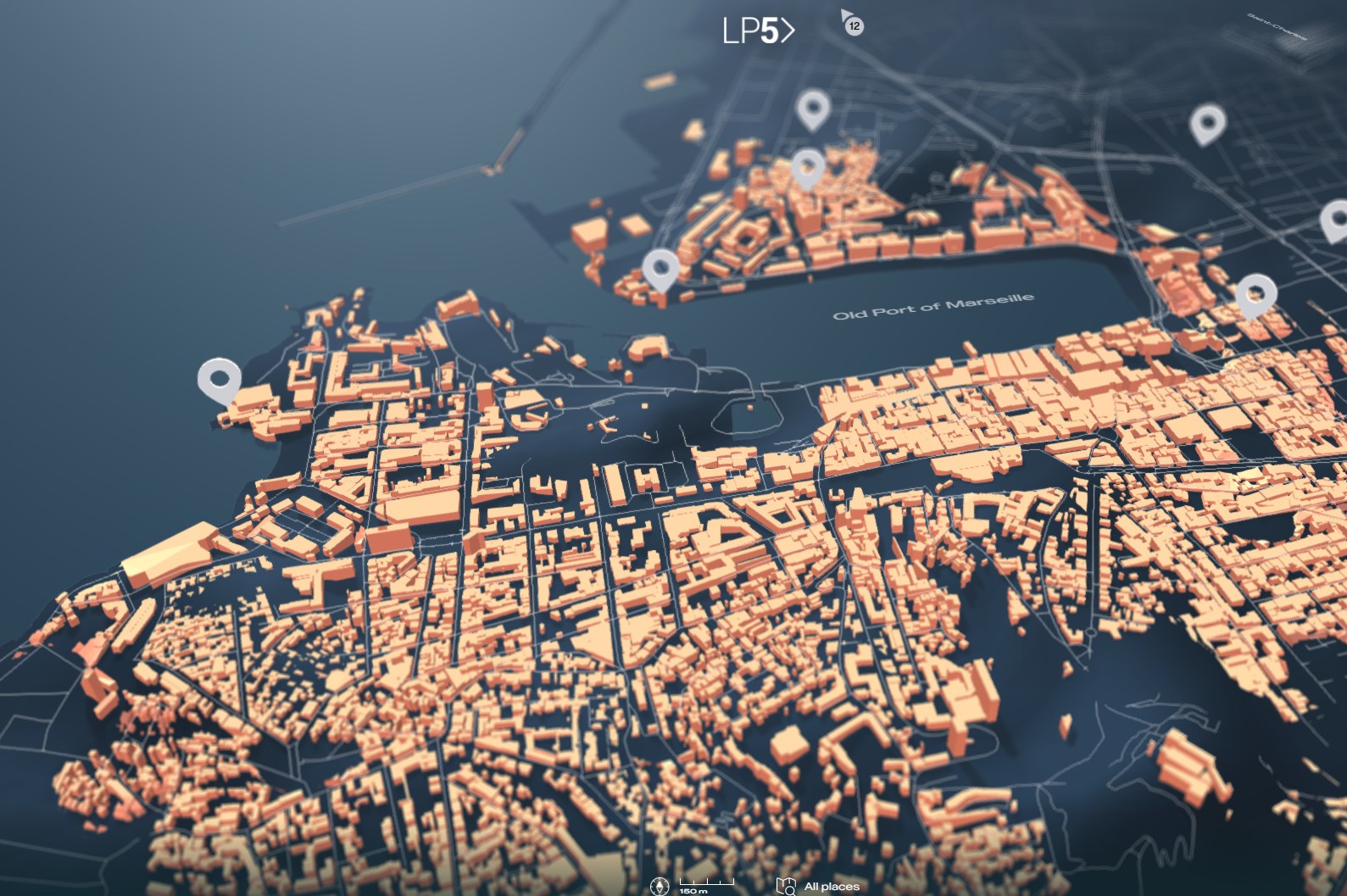

they’re based in Marseille, the second biggest French city, by the Mediterranean sea. it’s quite a unique city, both classy and cheap, packed and deserted. La Phase 5’s plan was to write a love letter to their city: a “guided tour” of Marseille, in WebGL, with a curated list of handcrafted 360° panoramas.

a “fun little project”

I like maps a lot. when they asked for a map I was all warm and fuzzy and, given my previous research, I was fairly confident that a 3D version could work.

after some discussions, it appeared that we wouldn’t be able to model the city at the scale we needed: Axel Corjon, the POWER Art Director I worked with, managed to create a model of Marseille in Cinema4D and ended up with roughly 4M triangles, which is still a tad too much for real time rendering in a browser :)

we could have modeled a “toy version” of Marseille but it went against the second part of the project that was about revealing 360° panoramas of actual places in the city.

that’s possibly when the fun little project turned into a sea dragon (the bad kind).

data acquisition and pre-process

the problem was that modeling the whole city in 3D meant using a tile map system which added a touch of preprocessing.

first we needed data. to get it, I:

- downloaded the “Provence Alpes-Cote-d’Azur” PBF from geofabrik

- then used Osmconvert to crop a sub region (lat/lng boundingbox) and save it as PBF

- then used Osmosis to filter out amenities

- then used mapsplit to split the cropped area into individual PBF tiles (slippy or XYZ tiles)

- then used osm2geojson to convert the PBF tiles to individual GEOJSON to manipulate them easily with python

- then used maperitive to check the data (not required but very handy!)

- then used custom python scripts to filter out amenities further and create custom tiles

essentially:

```
# crop a region and save as PBF
.\osmconvert.exe paca.pbf -b=5,44,6,45 --out-pbf -o=paca_crop.pbf

# split OSM to tiles
java -Xmx6G -jar mapsplit-all-0.2.1.jar -tvm -c -i paca_crop.pbf -o tiles/t_%x_%y_%z.pbf -z 16
```

where -b is the bounding box of the area to crop (min lng, min lat, max lng, max lat). additionally, I fetched the corresponding elevation tiles from the amazon LTS, to create the terrain.
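to know which elevation tiles match a cropped area, the usual slippy map formula does the job. this is a minimal sketch, not the script I used: the function names are made up for the example and it assumes standard XYZ (Web Mercator) tile indices.

```python
import math

def lnglat_to_tile(lng, lat, zoom):
    """Return the (x, y) indices of the XYZ tile containing a lng/lat point."""
    n = 2 ** zoom
    x = int((lng + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    return x, y

def tiles_for_bbox(min_lng, min_lat, max_lng, max_lat, zoom):
    """List every (x, y) tile touching the bounding box at this zoom level."""
    # tile y grows southwards, so the south-west corner has the largest y
    x0, y1 = lnglat_to_tile(min_lng, min_lat, zoom)
    x1, y0 = lnglat_to_tile(max_lng, max_lat, zoom)
    return [(x, y) for y in range(y0, y1 + 1) for x in range(x0, x1 + 1)]
```

calling `tiles_for_bbox(5, 44, 6, 45, 16)` would list the zoom 16 tiles covering the bounding box used in the osmconvert command.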

WHY?! you ask, let me illustrate.

below is a rendition of the tilezen vector tileset at zoom level 15, buildings are rendered in red, those would be the shape we extrude to create the buildings

sparse, boring.

now here’s roughly the same spot at zoom level 16: now we’re talking :)


for legibility & performance reasons, we don’t need all the info at lower zoom levels. that’s why most tile providers don’t bother and simply discard smaller buildings at lower zoom levels based on their surface and ‘importance’ (a tiny but famous landmark may still be visible at lower zoom levels). so, to get the required precision upfront and have the ability to isolate amenities by type (buildings, roads,…), we needed to work with the “complete” dataset, the equivalent of the zoom level 16+.

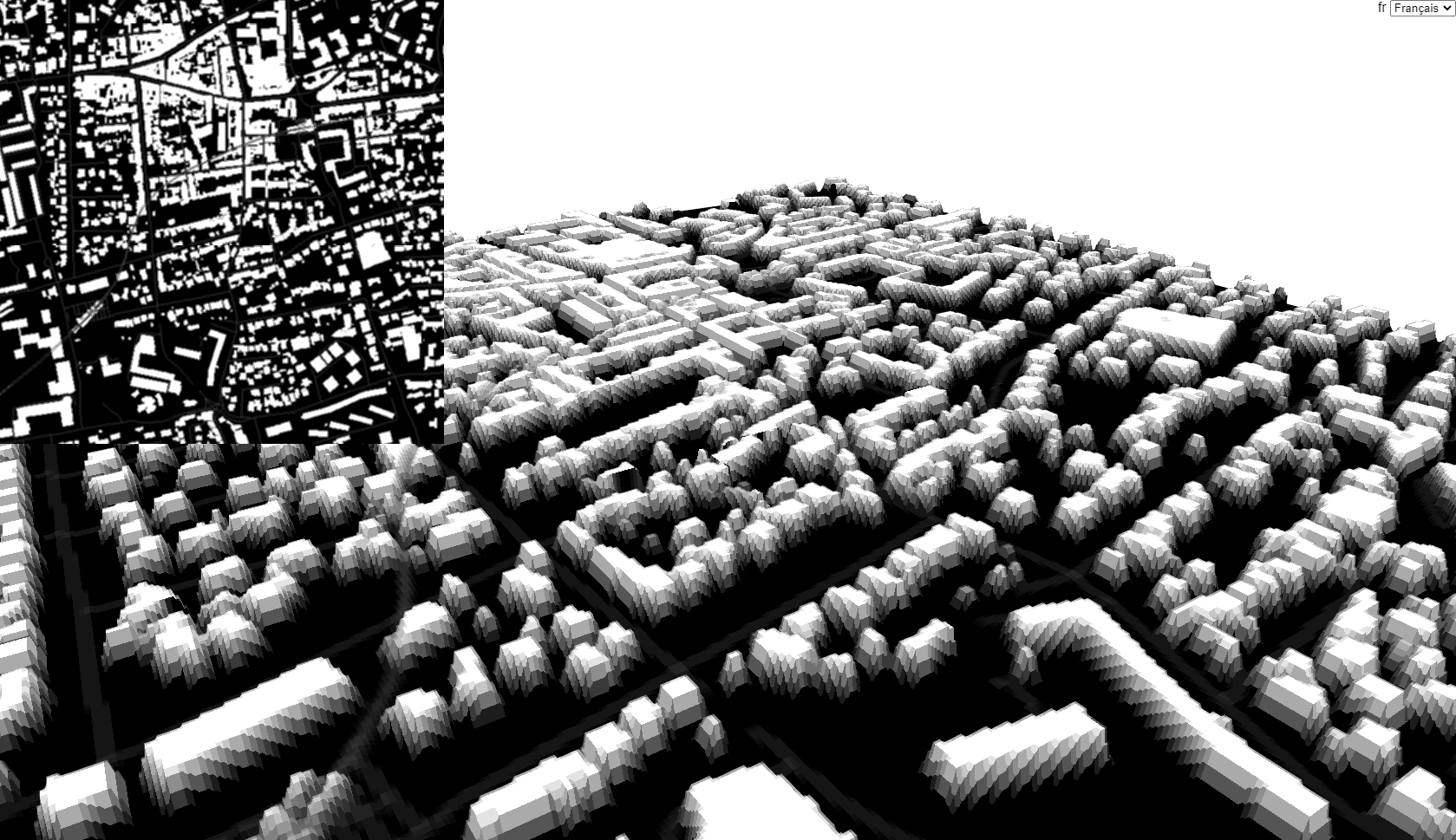

my first idea was to create a heightmap from the buildings geodata and displace a plane. it would have made it easy to merge the altitudes into the tiles (buildings would use the red channel, roads the green, and altitude the blue).

images are also very easy to work with so the bulge effect was practically free.

it sort of worked, at least it was not uninteresting and even worked with very thin lines (the roads)

I liked the version above. it came from using binary (black or white) values to draw the buildings and adding a small blur: very crisp, just not the intended aesthetics.

below is a “merged” tileset test

the tile combines 3 layers: the buildings in the red channel, the roads in the green channel and the altitude in the blue channel, the altitude is subtle but shows on the horizon.
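a merged tile like this one is just three greyscale layers stacked as color channels. here is a minimal sketch with numpy; `pack_layers` is a hypothetical helper and it assumes the three layers were already rasterized to same-size 8 bit images.

```python
import numpy as np

def pack_layers(buildings, roads, altitude):
    """Stack three single-channel uint8 layers into one RGB tile."""
    layers = [np.asarray(layer, dtype=np.uint8) for layer in (buildings, roads, altitude)]
    if not (layers[0].shape == layers[1].shape == layers[2].shape):
        raise ValueError("all layers must share the same shape")
    # result shape is (height, width, 3), channels in R, G, B order
    return np.stack(layers, axis=-1)
```

note that OpenCV writes images in BGR order, so the channels would need to be reversed (`tile[..., ::-1]`) before calling `cv2.imwrite`.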

raster tiles proved to be limited, especially as they sneakily re-introduced the precision issue: as the geometric grid doesn’t correspond to the pixels, it gives the impression that the buildings “slide” over the geometry instead of being solidly anchored in it, which is not what we wanted.

I tried a couple of things to create meshes. I was interested in TIN but it takes a GeoTIFF as an input and I didn’t manage to create one, so that’s something to try later.

now the nice thing with having vector data is that we can apply various operations onto it. I pushed the pre-process a bit further: I simplified and triangulated each building Polygon with OpenCV, then extruded them and stored the tiles as binary objects.
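the extrusion step is the simple part. the sketch below is not my original code: `extrude` is a hypothetical helper that assumes the footprint is a closed ring of (x, z) points and that the roof triangles come from whatever 2D triangulator was used before.

```python
def extrude(ring, cap_triangles, height):
    """Extrude a 2D footprint into a prism and return (vertices, faces).

    ring: list of (x, z) points, without repeating the first point at the end.
    cap_triangles: index triples into `ring`, produced by a 2D triangulator.
    """
    n = len(ring)
    # bottom ring first (y = 0), then the top ring (y = height)
    vertices = [(x, 0.0, z) for x, z in ring] + [(x, height, z) for x, z in ring]
    faces = []
    for i in range(n):
        j = (i + 1) % n
        # each wall is a quad split into two triangles
        faces.append((i, j, n + j))
        faces.append((i, n + j, n + i))
    # the roof reuses the 2D triangulation, shifted to the top ring
    faces.extend((n + a, n + b, n + c) for a, b, c in cap_triangles)
    return vertices, faces
```

the winding order of the walls depends on the orientation of the ring, so a real pipeline would either normalize the ring direction first or render the material double sided.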

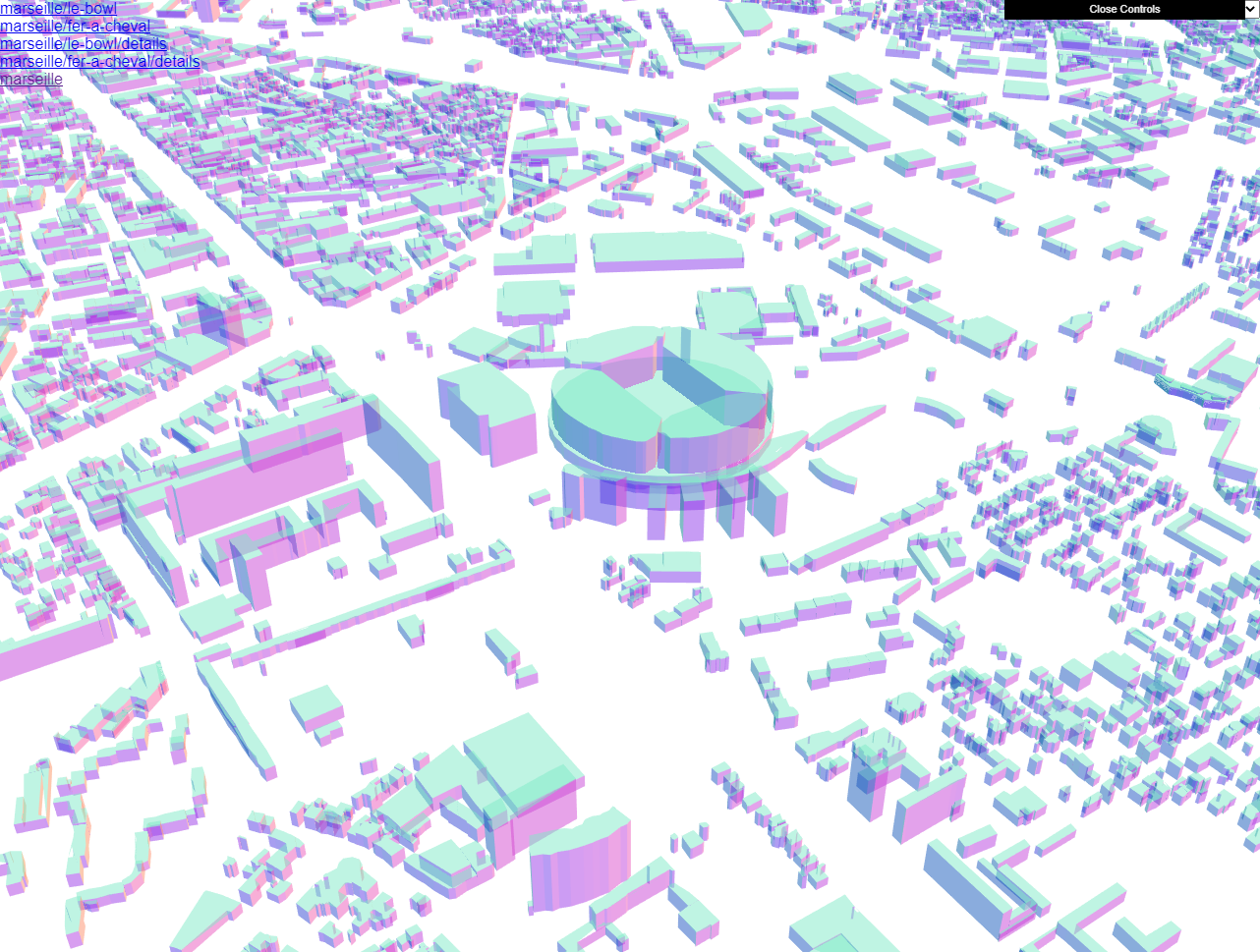

and voilà! 3D tiles!

it’s not perfect and there are some holes in the caps but it’s good enough when seen from afar and it looks cool which is the only relevant metric.


behold! the embodied nightmare of tile providers and GPU makers alike: a WebGL map of Marseille at zoom 19 ( ~3.5M triangles )

so dense, so yummy.

and the good part is that some buildings are well documented like this stadium

which brings a great sense of detail and creates some happy accidents while navigating the city.

by the way, the OSM data contains a unique ID per feature so when making the tiles, I made sure to store them to prevent overlapping buildings, as a building may belong to several tiles. it’s even more important with roads, which can cross many tiles and appear in each of them. this gives lighter tiles and prevents z-fighting, but the tradeoff is that the features are not sorted: a tile may load with some “missing” buildings that appear later when the neighbouring tiles load.

a further optimisation would consist in merging the tiles: for now each tile contains roughly 2K vertices. I could have merged them 4 by 4 or up to a fixed size but this would have required different loading strategies. I could also have simplified the paths more aggressively but, as it stands, it’s not perfect but it works.
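the deduplication boils down to remembering which IDs were already written. a minimal sketch, assuming each feature is a dict with an `"id"` key (the data layout and the `assign_features` name are made up for the example):

```python
def assign_features(tiles):
    """Keep each feature only in the first tile that mentions it.

    tiles: dict mapping a tile key to a list of features (dicts with an "id").
    """
    seen = set()
    result = {}
    for key, features in tiles.items():
        kept = []
        for feature in features:
            if feature["id"] in seen:
                continue  # already stored in a previous tile
            seen.add(feature["id"])
            kept.append(feature)
        result[key] = kept
    return result
```

the order in which the tiles are visited decides which tile “owns” a shared feature, which is why a building can show up only once its neighbouring tile has loaded.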

navigation & tile management

a quick note about navigation: everything is located with a regular tile map engine, as if they were raster tiles.

recomputing the individual GPS coordinates of each building polygon would be prohibitively expensive for the CPU, so the 3D tiles’ coordinates were computed in a 256² space to match the size of a raster tile. the engine then only places the 3D tiles according to the top left lat/lng of the tile, at zoom level 16. this still gives very high position values (in the tens of millions of units) so everything is offset by “minus the center” of the map. this prevents the very high position values that cause the renderer to glitch because of the depth map precision (yes, even with a logarithmic depth buffer).

there’s a second benefit in offsetting everything by minus the center of the map: the camera target is always at 0, 0, 0 !

the map has a “display radius” that manages the tiles’ visibility and there’s a vertex shader that handles the tiles’ appearance. I used the rather cryptic yet so handy onBeforeCompile method of three.js’ materials.
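in other words, a tile’s position is its index times 256, minus the same thing for the center tile. a minimal sketch of that idea (the function names and the tile indices in the usage note are illustrative, not taken from the project):

```python
TILE_SIZE = 256  # a 3D tile spans the same 256 x 256 space as a raster tile

def tile_origin(x, y):
    """Top left corner of a tile in 'world pixels' at the tile's zoom level."""
    return x * TILE_SIZE, y * TILE_SIZE

def tile_position(x, y, center_tile):
    """Position of a tile once everything is offset by minus the map center."""
    ox, oy = tile_origin(x, y)
    cx, cy = tile_origin(*center_tile)
    return ox - cx, oy - cy
```

with a center tile of `(33745, 23960)`, the raw origin is in the millions while `tile_position(33746, 23961, (33745, 23960))` is just `(256, 256)`, which is much kinder to 32 bit floats.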

```js
//material is a MeshStandardMaterial instance
let uniforms = {
  radius: { value: 1 },
};
material.onBeforeCompile = (m) => {
  m.uniforms = Object.assign(m.uniforms, uniforms);
  let vs = m.vertexShader;
  vs = vs.replace(
    "#include <common>",
    `#include <common>
    uniform float radius;
    float noise(vec2 p){
      vec2 ip = floor(p);
      vec2 u = fract(p);
      u = u*u*(3.0-2.0*u);
      float res = mix(
        mix(rand(ip),rand(ip+vec2(1.0,0.0)),u.x),
        mix(rand(ip+vec2(0.0,1.0)),rand(ip+vec2(1.0,1.0)),u.x),u.y);
      return res*res;
    }`
  );
  vs = vs.replace(
    "#include <displacementmap_vertex>",
    `#include <displacementmap_vertex>
    float r = length( (modelMatrix * vec4( transformed, 1. ) ).xyz ) / radius;
    r = 1. - smoothstep( .6, .75, r + .5 * ( noise(transformed.xz * 0.01 ) - noise(transformed.xz * 0.0001 ) ) );
    transformed.y *= min( 1., r );`
  );
  m.vertexShader = vs;
};
```

I control the height of the buildings by adding noise depending on the distance to the center of the world (which is … 0, 0, 0 ! thank you offset :))

terrain and water

for the terrain, I thought I’d use a tileset: there’s a 1:1 match with the building tiles’ locations, the data is readily available through the amazon LTS and it’s easy to compute.

well, think again tiger! I couldn’t figure out exactly why but some seams appeared in 3D that don’t show on a 2D map. tyro (<check this out!) suggests blending the seams by using the values of the neighbouring tiles. given the tile based nature of my map, it was too complex to set up (I would have needed to recompute up to 9 textures each time a tile was loaded).

so instead I collected all the altitudes with this excruciatingly slow python method:

```python
import cv2
import numpy as np

# convert the elevation to an altitude in meters
def decodeUint8Altitude(pixel):
    # OpenCV stores the channels as BGR; cast to int to avoid uint8 overflow
    b, g, r = (int(v) for v in pixel[:3])
    return (r * 256 + g + b / 256) - 32768

# this parses each pixel of an image
def filterAltitudes(img):
    height, width = img.shape[:2]
    res = np.zeros((height, width), dtype=np.float64)
    for y in range(height):
        for x in range(width):
            res[y, x] = max(0, decodeUint8Altitude(img[y, x]))
    return res

# call
res = filterAltitudes(cv2.imread("tile.png", cv2.IMREAD_UNCHANGED))
# res now contains the altitudes as floats
```

I saved the altitude tiles as 8bit pngs with a 3 meters increment (not very precise but ok-ish) and computed a big png file of the altitudes, hoping to use it as a displacement map in blender.
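in hindsight the per-pixel loop is not needed: numpy can decode a whole tile at once. this is a sketch of what a faster version could look like, using the same decoding formula as above; `decode_altitudes` and `quantize` are hypothetical names, and the 3 meters step is the increment mentioned above.

```python
import numpy as np

def decode_altitudes(img_bgr):
    """Decode a whole elevation tile at once.

    img_bgr: (height, width, 3 or 4) uint8 array in OpenCV's BGR channel order.
    """
    px = img_bgr[..., :3].astype(np.float64)  # floats avoid uint8 overflow
    b, g, r = px[..., 0], px[..., 1], px[..., 2]
    altitude = (r * 256.0 + g + b / 256.0) - 32768.0
    return np.maximum(altitude, 0.0)  # clamp everything below sea level to 0

def quantize(altitude, step=3.0):
    """Store altitudes as uint8 grey levels, `step` meters per level."""
    # anything above 255 * step meters saturates at 255
    return np.clip(np.round(altitude / step), 0, 255).astype(np.uint8)
```

`quantize(decode_altitudes(cv2.imread("tile.png", cv2.IMREAD_UNCHANGED)))` would give an 8 bit image ready to be saved as a PNG.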

meanwhile I also triangulated and exported the land and water from my vector data, and merged them into OBJ files. in Blender, I cleaned them up manually, there are some insanely cool tools to decimate edges and create faces! ( edit mode > edge mode : select > select similar, mesh > cleanup > decimate geometry, you’re welcome :) )

it worked nicely for the water but unfortunately, the grid of the landmass mesh was too regular and not well defined enough so I gave up.

first I thought I’d use the altitudes texture as a displacement map in blender but instead, I used the https://elevationapi.com/ to produce a mesh, manually aligned it to the coastline and decimated it to reach a reasonable triangle count. I used a smaller version of the altitudes’ PNG to locate the surface and prevent the camera from going through the ground. as the ground mesh is much coarser than a tileset, I couldn’t get the roads to “stick” to the ground nicely so they float above the terrain, guess I’ll have to live with it.

360° panoramas & titles

the map was fun already but there was a second important feature: 360° panoramas.

I happened to work on Hopper the Explorer and already knew a thing or two about photospheres; this time they needed a fresh take on panoramas. I suggested using a Machine Learning model to infer depth and making the sphere appear using a depth map.

this is the test I did to convince them it was a good idea: the first transition is seen from outside the sphere, to give an idea of how the geometry is distorted when using the depth map. the last bit of the video shows how it looks from inside, which is the intended effect. the red ribbon is the text that was supposed to be partially occluded by the foreground.

and the least I can say is that they were not convinced ^^’

so we dumped the depth maps and changed for a much more qualitative approach, closer to the art direction: Axel created 2D masks for the foreground to partially hide the text and did some transition mockups using an optics compensation effect (aka a pincushion effect).

the end result looks somewhat like this (better check it live though).

here’s the fragment shader used during the post processing. it takes 2 textures as input (tDiffuse, the map, and tSphere, the panorama) plus a mixer value.

```glsl
uniform float mixer;
uniform sampler2D tDiffuse;
uniform sampler2D tSphere;
varying vec2 vUv;

//idea
//https://www.decarpentier.nl/lens-distortion
//distort method from
// https://www.imaginationtech.com/blog/speeding-up-gpu-barrel-distortion-correction-in-mobile-vr/
vec2 distort(vec2 st, float alpha, float expo ){
  vec2 p1 = vec2(2.0 * st - 1.0);
  vec2 p2 = p1 / (1.0 - alpha * length( p1 ) * expo );
  return (p2 + 1.0) * 0.5;
}

void main() {
  float t = mixer;
  float tr = smoothstep( 0.75,1., t );
  float e = t * 4.;
  //stretch out city scene
  vec2 uv = distort( vUv, -10. * pow( .5 + t * .5, 32. ), e );
  vec4 city = texture2D( tDiffuse, uv );
  //pinch in sphere scene
  uv = distort( vUv, -10. * ( 1. - tr ), e );
  vec4 sphere = texture2D( tSphere, uv );
  //blend
  gl_FragColor = mix( city, sphere, tr );
}
```
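to get a feel for what `distort` does to the UVs without firing up a browser, it can be mirrored in a few lines of python. this is only a port of the GLSL function above for experimenting, it is not part of the app.

```python
import math

def distort(st, alpha, expo):
    """Python mirror of the shader's distort(): st is a (u, v) pair in [0, 1]."""
    # remap the uv from [0, 1] to [-1, 1] so the distortion is centered
    p1 = (2.0 * st[0] - 1.0, 2.0 * st[1] - 1.0)
    # the further from the center, the stronger the scaling
    scale = 1.0 - alpha * math.hypot(p1[0], p1[1]) * expo
    # back to [0, 1]
    return ((p1[0] / scale + 1.0) * 0.5, (p1[1] / scale + 1.0) * 0.5)
```

the center of the screen never moves, `alpha = 0` leaves the UVs untouched, and a negative `alpha` (the only case the shader uses) pulls the sampled UVs towards the center.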

the titles needed to be animated letter by letter and localized, Axel pointed me to the JS library they use at Fonts Ninja and I was impressed!

opentype.js gives you access to the vector data of a font, with proper kerning, which made it possible to write arbitrary texts on the fly (I should probably start using SDF Fonts at some point).

here’s a test of the split letters word

and a quick animation test.

one of my regrets is not having had more time to polish the text animation.

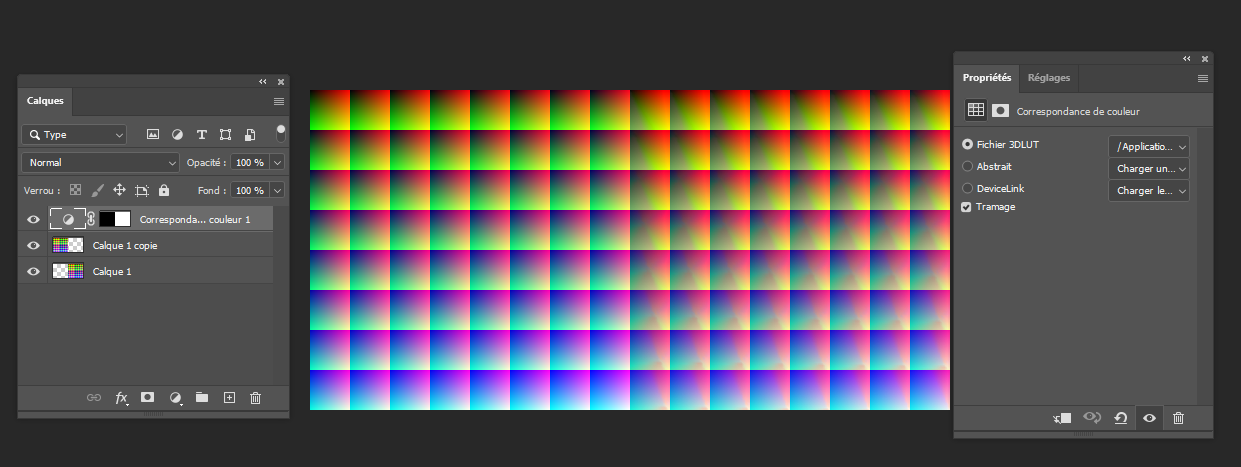

Post processing LUT

at some point Axel told me he used Photoshop LUTs (Look Up Tables) to beautify the C4D renders and asked if we could have this on the website. little did I know how much of a pain it would be.

first, a LUT is a tool to transform a pixel value, like a filter. to disambiguate, what most people call a LUT is often a palette or gradient mapping: using a greyscale image as input, we fetch the corresponding value in a palette (often a gradient) and replace the greyscale value with the color. here’s a three.js example: https://threejs.org/examples/?q=lookuptable#webgl_geometry_colors_lookuptable

so it’s usually limited to 256 discrete values and gives a very cartoon look to the final image (it works very well for cel shading).

Photoshop LUTs map each pixel of an image to one of 32768 (32^3) RGB values and give much more subtle results.

FUN FACT three.js does have a 3D LUT post processing pass since r122, I was simply using the r120 and by the time I realized it was in there, I had a working alternative. I’m leaving the code here for “learning purpose” or in case the 3D LUT uses 3D textures (I haven’t checked, I’m still too pissed!)

to illustrate, the image below is the LUT I used, as rendered by Photoshop. on the left hand side, it’s a series of 64 quads of 64 * 64 pixels that gradually brighten up. think of it as the slices of a box made of 64^3 cubes. on the right hand side, the LUT was applied to the same grid and, hopefully, you see that the colors behave differently. used on an image, this will produce the (in)famous Instagram filter look.

in the app, we’ll use the right hand texture as our LUT.

I asked some creative dev friends for help and they pointed me to Matt DesLauriers’ implementation, which helped a lot. I then used it as part of the post process pipeline. for the record here’s the code.

first a python script that generates the grid above:

```python
import cv2
import numpy as np

size = 64
buffer = np.zeros((512, 512, 3), dtype=np.uint8)
for b in range(0, size):
    x = (b % 8) * size
    y = (int(b / 8)) * size
    for r in range(0, size):
        for g in range(0, size):
            buffer[y+g:y+g+1, x+r:x+r+1, 0] = int(b * 4)
            buffer[y+g:y+g+1, x+r:x+r+1, 1] = int(g * 4)
            buffer[y+g:y+g+1, x+r:x+r+1, 2] = int(r * 4)
cv2.imwrite("lut_src.png", buffer)
```

this is not required by the app but it can help checking in photoshop and/or debug in WebGL.
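along the same lines, applying the LUT on the CPU is a handy way to check a texture before debugging the shader. the sketch below assumes the same layout as the grid script (8 x 8 cells of 64 x 64 pixels, red along x, green along y, blue selecting the cell) but works in RGB order, and it does a nearest-neighbour lookup without blending two blue slices. `make_identity_lut` and `apply_lut` are hypothetical helpers.

```python
import numpy as np

def make_identity_lut(size=64, columns=8):
    """Rebuild the neutral grid as an RGB array (not BGR like OpenCV)."""
    rows = size // columns
    lut = np.zeros((rows * size, columns * size, 3), dtype=np.uint8)
    ramp = (np.arange(size) * 4).astype(np.uint8)
    for b in range(size):
        x0 = (b % columns) * size
        y0 = (b // columns) * size
        cell = lut[y0:y0 + size, x0:x0 + size]  # a view into the big texture
        cell[..., 0] = ramp[None, :]  # red grows along x
        cell[..., 1] = ramp[:, None]  # green grows along y
        cell[..., 2] = b * 4          # blue is constant in each cell
    return lut

def apply_lut(image, lut, size=64, columns=8):
    """Nearest-neighbour lookup of an RGB uint8 image in the LUT grid."""
    idx = np.round(image[..., :3].astype(np.float64) / 255.0 * (size - 1)).astype(np.intp)
    r, g, b = idx[..., 0], idx[..., 1], idx[..., 2]
    x = (b % columns) * size + r
    y = (b // columns) * size + g
    return lut[y, x]
```

running an image through the identity LUT should give back nearly the same image (colors are snapped to 64 levels per channel), while the graded LUT exported from Photoshop should reproduce the filter.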

the ShaderPass goes like:

```js
let LUTShader = {
  uniforms: {
    tDiffuse: { value: null },
    tLut: { value: null },
    opacity: { value: 1.0 },
  },
  vertexShader: `
    varying vec2 vUv;
    void main() {
      vUv = uv;
      gl_Position = projectionMatrix * modelViewMatrix * vec4( position, 1.0 );
    }`,
  fragmentShader: `
    uniform float opacity;
    uniform sampler2D tDiffuse;
    uniform sampler2D tLut;
    varying vec2 vUv;

    vec4 lookup(in vec4 textureColor, in sampler2D lookupTable) {
      //square index by blue level (linear ; it gives the cell index in a 'flat' array)
      float b = ( textureColor.b ) * 63.;
      // current cell id : we remove the fractional part to "snap" to the previous integer (ie 12.3564546 > 12)
      float fb = floor( b );

      // current cell uv
      // the x position of the cell in the grid is given by : mod( cellId, columns)
      // the y position of the cell in the grid is given by : floor( cellId / columns)
      vec2 uv0 = vec2( mod( fb, 8. ), floor( fb / 8. ) );

      // and everything is downscaled by : 1 / columns ( 1. / 8. in this case as 64 px cell size = 512 px texture size / 8 )
      float grid_cell = 1. / 8.;
      //this is the top left corner of the cell
      uv0 *= grid_cell;

      //we need to "pad" the cell to make sure we hit the center of the cell's corner pixels
      //padding = half a pixel so: .5 / 512.
      float padding = .5 / 512.;
      vec2 cell_offset = vec2( padding );
      //the cell must be a little smaller too: the width shrinks by 2 paddings
      vec2 cell_size = vec2( grid_cell - 2. * padding );

      //we add the offset to the uv and finally we lerp the uv between the top left and bottom right corners of the cell
      //we use the r and g values of the texel
      uv0 += cell_offset + textureColor.rg * cell_size;

      // next cell id and uv (same as above with the next index in the 'flat list' )
      float cb = ceil( b );//min( fb+1., 63. );
      vec2 uv1 = grid_cell * vec2( floor( mod( cb, 8. ) ), floor( cb / 8. ) );
      uv1 += cell_offset + textureColor.rg * cell_size;

      //flip Y
      uv0.y = 1. - uv0.y;
      uv1.y = 1. - uv1.y;

      //now we sample the 2 colors from the lookup table
      vec4 c1 = texture2D(lookupTable, uv0);
      vec4 c2 = texture2D(lookupTable, uv1);

      //and mix them using the fractional part of the ( blue value * cell count )
      return mix(c1, c2, fract( b ) );
    }

    void main() {
      vec4 texel = texture2D( tDiffuse, vUv );
      gl_FragColor = lookup( texel, tLut );
    }
  `,
};
export { LUTShader };
```

and finally, to use it with three.js effectComposer:

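```js
import { TextureLoader, LinearFilter } from "three";
import { EffectComposer } from "three/examples/jsm/postprocessing/EffectComposer.js";
import { RenderPass } from "three/examples/jsm/postprocessing/RenderPass.js";
import { ShaderPass } from "three/examples/jsm/postprocessing/ShaderPass.js";
// LUTShader is the object exported by the shader module above

renderer.autoClear = false;
let composer = new EffectComposer(renderer);
const renderPass = new RenderPass(scene, camera);
composer.addPass(renderPass);

let LUTPass = new ShaderPass(LUTShader);
let loader = new TextureLoader();
loader.load("/textures/lut.png", (t) => {
  LUTPass.uniforms.tLut.value = t;
  t.magFilter = t.minFilter = LinearFilter; // important!
});
composer.addPass(LUTPass);
```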

I wanted to add a bokeh filter too but it was deemed too CPU intensive and was removed (still available in the debug panel under /params/postprocess).

wrap up

what a “fun little project”! I learnt a lot and it was so nice to work with very skilled people, Axel (AD, 2D/3D motion & visuals…) & Emmanuel (Back, Front, Photos…) are very talented and very demanding. I like that very much.

by the way the debug panel is still active, you can try : https://marseille.laphase5.com/?debug=true and play with some of the stuff, overshoot the LUT, add bokeh, change the colors…

enjoy! :)

Hello, I am a Korean student who was impressed by reading this article and wants to create a similar web.

May I know what kind of filtering you went through with osmosis?

hello,

to be honest, I don’t remember exactly what I did but it involved a lot of trial and error…

if it’s a student (non-commercial) project, I think you can use the tilezen vector tiles (https://tilezen.readthedocs.io/en/latest/).

make sure to download the tiles you need locally, to leave their servers in peace :)

creating an interactive map is very challenging “as is” and computing the tiles from scratch adds a fair amount of complexity.

also I came across this blender plugin https://github.com/vvoovv/blender-osm/wiki/Premium-Version that extracts an area from OSM and converts it into a mesh.

it can be handy if you don’t need to move around.

good luck for your project

thank you for answering!

Still, I really want to study and research this map.

If it’s okay with you, is it okay if I ask you things you don’t know?

I’ve now made pbf tiles using mapsplit and it’s so hard to convert them to geojson! Can you tell me how to use osm2geojson ?

Hello, Nicolas!

Could you please tell me how much time did it take to develop the map?

And how much did it cost?

hey,

my part took 6 weeks and cost ~20K€

there was also a senior art director ( important ! ) and a senior front end dev ( to create the contents, the UI and fix many many little details )

Hi, Nicolas!

Could you please advise what can be simplified to save budget and development time resources but still make it visually close to the map of Marseille?

hey,

to cut the budget: use an existing tile service and an existing rendering library like MapboxGL or Google Maps (it now features 3D buildings and may let you customize them).

this will save weeks (months?!) of work and mechanically shrink the budget, especially on the 3D part which is a massive pain (trust me ^^).

you can’t expect to have something as customized though + you’ll rely on a third party provider (they ‘ll charge you for each user, possibly making it more expensive in the long run).

Hey!

Thanks for your answer!

Yeah, we’ve looked at Google Maps and found such problems: it’s possible to set the color only to all 3D buildings and they appear only with a certain zoom.

Is this really the case or is there a way around it?

Can you tell me how to do it, if it’s possible?

Sometimes, Mapbox has overlays of 3D buildings on each other when using different zooms.

Thank you!